The use of AI in everyday life has become commonplace in recent years, but the safety concerns that come with it have not received as much attention. Recent events have shown the consequences of failing to take proper measures to ensure the safe use of AI.

AI is a technology that is not yet fully understood. Even the researchers who are advancing the technology don’t have a complete understanding of its implications.

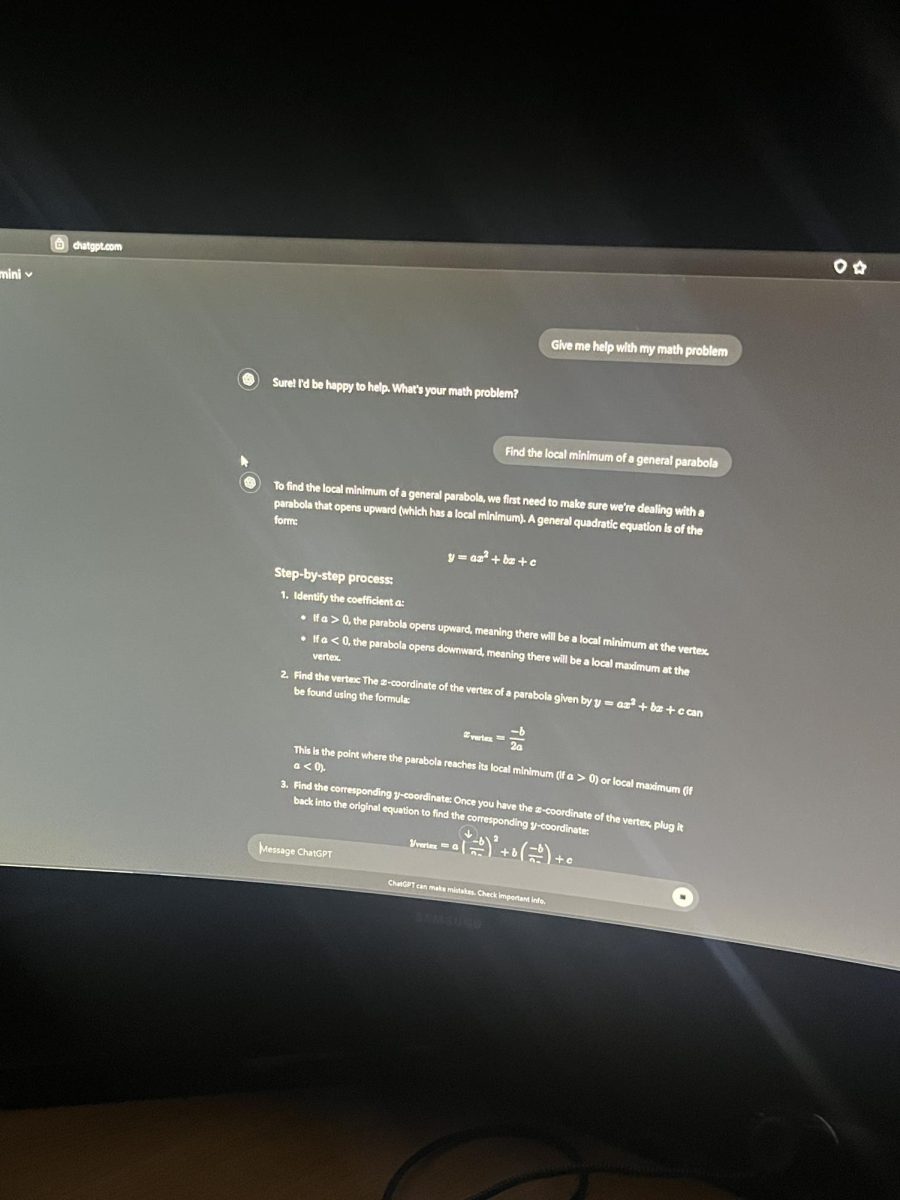

Ordinary people understand even less about the technology and how to use it safely. “I have no clue how all of these AI tools work,” said senior Grant Jaques. “All I know is that it just generates text for me.”

Despite these risky knowledge gaps, investors have been putting large sums of money into the AI industry, and AI continues to be integrated into almost every aspect of life. Because the safety implications are not yet fully known, proper precautions are often not taken.

An unfortunate example is Character.AI, a chatbot company that did not implement proper safety measures, such as content filtering, for its AI.

Character.AI’s chatbot began outputting very harmful content. Unfortunately, one of the many people to receive this content was a teen in a fragile mental state who believed he was in a relationship with the chatbot. The harmful content from the chatbot led him to take his own life.

“I have noticed that even with some of the ‘better’ AI tools, they often will provide harmful content,” said senior Reetham Gubba.

After his death, a lawsuit was filed against Character.AI and its creators for failing to ensure the safety of their product. The boy’s mother aims to seek justice for the loss of her son and to raise awareness about what needs to be done to make AI safe to use.

This tragic event highlighted the dire need for better content filtering and safety precautions in AI products. Character.AI has already put out a statement about its plans to introduce a safer experience for users under 18.

Additionally, this event has brought to light the realization that AI, even as nothing more than a text generation tool, can be very harmful. “There are many things that are good to use AI for, but it is important to set boundaries with it to keep oneself safe,” said Gubba.