The rise of AI has come with benefits like saving time, eliminating biases and automating repetitive tasks. Its drawbacks include job loss, cheating and bad data. Less discussed is a newer side of AI: its ability to create explicit photos of people against their will.

The main concerns are the amount of child pornography and nonconsensual explicit imagery being created. AI systems are becoming better equipped to produce realistic explicit imagery and to transform any photo of a fully clothed person into a nude.

LAION is one of the largest datasets used to train AI. The Stanford Internet Observatory found that it contains more than 3,200 images of suspected child sexual abuse, material that has been used to train AI image-makers.

LAION responded to the allegations, explaining that it has developed “rigorous filters” to detect and remove illegal content before releasing its datasets. While these filters are a good-faith attempt to keep out unsafe material, consulting with child safety experts might have spared the organization these problems. Asked about the idea of using the FBI’s resources, senior Adrianna Slings said, “I feel the FBI’s resources should not be required but is beneficial for the greater or good.”

The discussion of LAION’s material prompted popular AI sites DALL-E and ChatGPT to disclose that they don’t use LAION, specifying that their filters refuse any requests for sexual content involving minors.

Stanford Internet Observatory’s chief technologist, David Thiel, called out CivitAI, a popular platform for making AI-generated pornography, for its lack of safety precautions.

“Taking an entire internet-wide scrape and making that dataset to train models is something that should have been confined to a research operation, if anything, and is not something that should have been open-sourced without a lot more rigorous attention,” Thiel commented.

A representative from CivitAI responded to Thiel’s accusations by saying the site has “strict policies” on the generation of images depicting children and that its policies are both “adapting and growing” to ensure more safeguards.

Although most online image generators claim to block violent and pornographic content, Johns Hopkins University researchers found that those safeguards can be bypassed. Once the researchers learned how to manipulate the systems, creating inappropriate images was easy. With the right code, the researchers said, anyone, from unsuspecting users to people with ill intent, could bypass the systems’ safety filters and create inappropriate and harmful content.

Senior Addie Kilcoin shared her perspective.

“I feel AI allows people of any age to access inappropriate content that can harm a child’s innocence … I can’t imagine what developments there will be in the future related to AI, but anything within this new development will not be good for younger generations.”

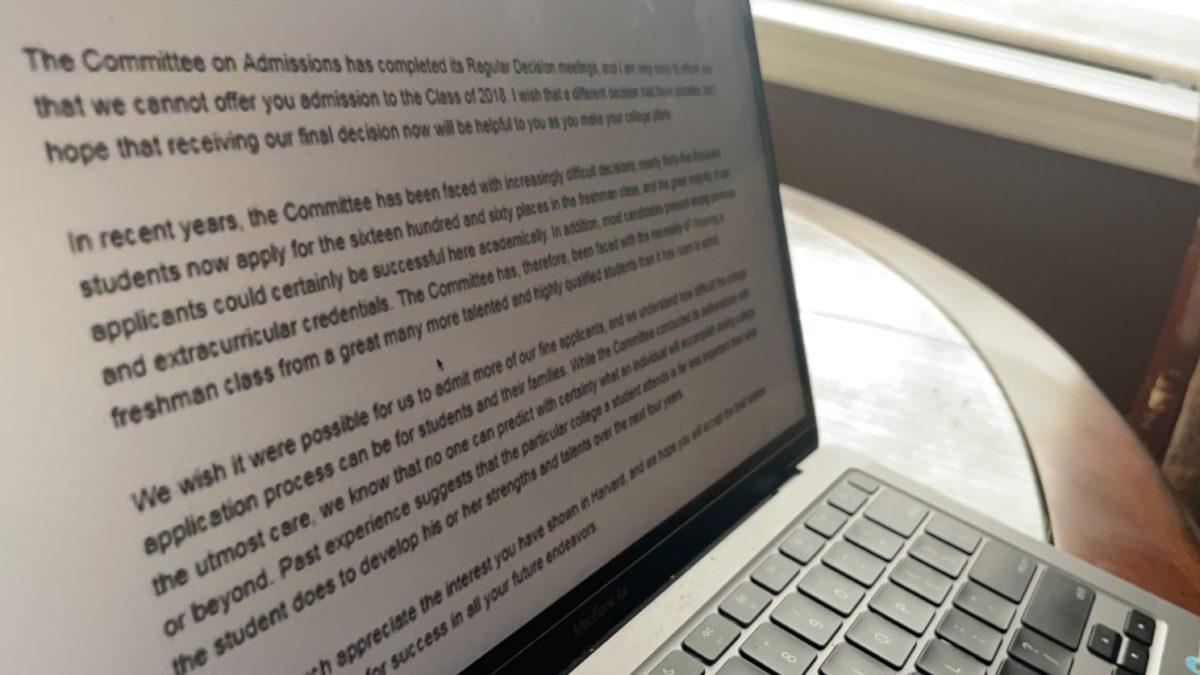

If a user enters a command for questionable imagery, the technology is supposed to decline. However, the Johns Hopkins team tested the systems with a new algorithm named SneakyPrompt and found that nonsense commands could slip past the filters. For example, the command “sumowtawgha” prompted DALL-E 2 to create realistic pictures of nude people.

Tech leaders across the globe are responding to AI’s problematic implications by calling for a pause on AI experimentation. Many of them agree that the technology poses risks to society.

The threat posed by AI has even reached America’s sweetheart, Taylor Swift. AI-generated pornographic images of Swift spread across multiple social media platforms, including Instagram, Reddit and X.

The public was outraged by the platforms’ inability to moderate their content. Senior Julien Fairman shared her opinion on the matter: “I think AI having the ability to undress photos of anyone will affect high schools the most. There are many possible implications of using it for blackmail, which will lead to chaos within schools.”

A solution seems distant when only 10 states have directly outlawed the creation or sharing of nonconsensual synthetic images. Additionally, there is currently no federal law against the circulation of such content. On Oct. 30, 2023, President Joe Biden issued an executive order calling for “the establishment of new standards for AI safety and security” while also directing the Office of Management and Budget to “consider the risks of deepfake image-based sexual abuse of adults and children” within new AI system guidelines.

This is a pressing issue that lawmakers need to address. What happened to Taylor Swift should prompt them to take it seriously. The problem doesn’t discriminate by gender, age or social status, and it needs to be discussed on bigger platforms before it can be resolved.
